AI offers huge opportunities for Australian business. Is your board prepared for self-programming machines and digital decision-making?

Is that a light at the end of the tunnel, or a rapidly approaching train? Whatever metaphor you prefer, it’s time for Australia to get serious and bridge the knowing/doing gap.

Artificial intelligence (AI) has been around for decades. But Catriona Wallace, CEO and founder of Flamingo AI and a speaker at the Australian Governance Summit in March, says that, thanks to growth in computational power and the capacity to train algorithms on data, it is now the most powerful transformation to affect business and society since the invention of electricity. She says the primary reason for the current emphasis on AI is the recognised decline in the ability of the traditional levers of production, capital investment and labour, to propel economic growth.

With this in mind, it is time for boards to step up and learn about AI (its uses, frameworks and shortcomings) and to build an AI strategy that amplifies or augments the traditional operating model.

At a recent forum, Microsoft Australia chief executive Steven Worrall cited International Data Corporation research showing that 80 per cent of senior executives and directors, including in Australia, saw AI as playing an important part in their business’s competitiveness over the next three years, and as an enabler. However, 17 per cent said they had not yet started to consider AI as part of their company’s strategy.

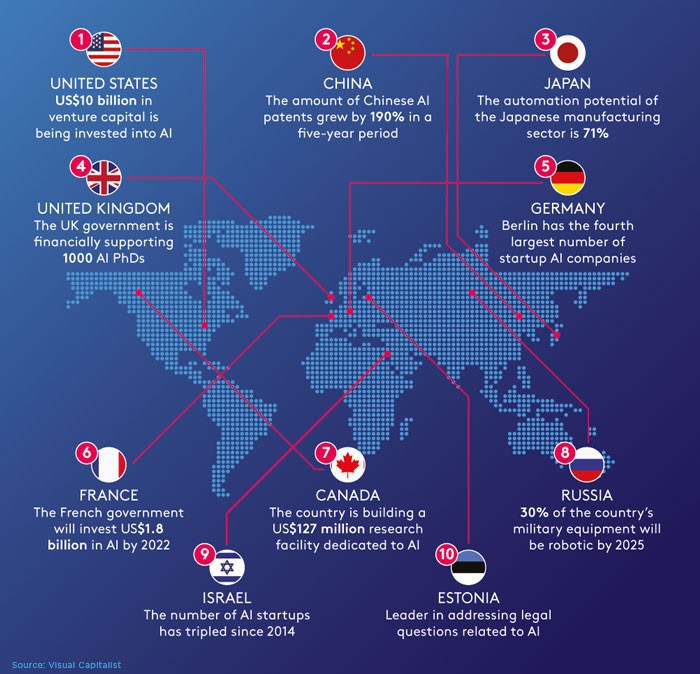

Why such a big gap? Worrall sees the primary impediment as skills and knowledge. “We are in a race, let’s make no mistake about that,” he says. “China has made it clear it expects to be the world leader in AI by 2030, so the imperative for us to act as a nation has never been clearer.”

Ash Fontana, managing director at Zetta Venture Partners, told the 2019 Australian Governance Summit in March that AI allows companies to increase efficiency through automation and enjoy a significant competitive advantage over those companies that fail to make the transition. Fontana said this era requires companies and their boards to manage new categories of risk and allocate capital to new categories of assets.

“Data assets, infrastructure and talent are the assets to build,” says Fontana. “Boards that help management manage those risks and accumulate those assets will not only put themselves in a safe position, but put their companies in a position to dominate the next era of technology-driven progress.”

The AICD’s Technology Governance and Innovation Panel is made up of directors and executives with relevant experience in Australia and globally. The panel advises the AICD on its work supporting directors to govern technology and innovation. Chair Kee Wong FAICD says the aim is to help members put technology and innovation front of mind in their businesses and across sectors.

Boards need to prepare for AI

When the Commonwealth Bank (CBA) appointed cultural anthropologist Professor Genevieve Bell as a non-executive director, it sent the loudest signal yet that AI has been unleashed.

A few weeks before joining the CBA board in December, Bell stood in front of 7000 of the world’s leading bankers gathered in Sydney for the Sibos conference. Her aim was to put them on notice about the impact the fourth industrial revolution, turbocharged by AI and machine learning (ML), would have. Captains of industry, she said, needed to consider what technologies, skills, regulations, checks and balances they would need to be prepared for the world that is coming.

“It might have taken us 250 years to get here, but we don’t have that much time,” said Bell, who is also a technologist and futurist, a director of the 3A Institute at the Australian National University and a senior fellow at Intel.

“If you think ‘the fourth industrial revolution doesn’t apply to me’, you’re wrong. It may not apply today or tomorrow, you may get a few more years before you have to start thinking about the consequences, but questions about whether the things you build will be autonomous and who gets to decide what their limits and controls are, how we will engage with them, how we will measure them and how we will make them safe — those are questions not for technologists, but for all of us.”

According to analyst firm Gartner, the global business value derived from AI rose to US$1.2 trillion last year and will soar to US$3.9 trillion by 2022 as companies use AI to improve customer experience, cut costs and open fresh revenue streams with new products and services. Financial services is among the most enthusiastic early adopters, along with retail, health, energy and government.

Liesl Yearsley is CEO and co-founder of Akin, a San Francisco-based company working to develop ethical AI. She sold her Australian AI business to IBM Watson in 2014. Speaking at the Spark Festival’s National Future Work Summit, Yearsley forecast that “artificial intelligence is going to change more in the next 10 years than we have seen in the last 1000”.

She says the question to consider is, “How are we going to work with AI? We need to think a little more about who is going to control our future — who will decide which systems have the most influence over us? What if 60 per cent of us did become redundant — how would we fill our time?”

AI and ML, designed intelligently and deployed sensitively, herald immense opportunity. But the technology is not without risk. Flawed algorithms and biased data sets can lead to unintended outcomes, while increased automation will likely reduce the need for employees engaged in repetitive work.

Lyn Nicholson, general counsel at law firm Holding Redlich, notes that at present “the approach we are all taking to software and AI is in many ways the complete opposite of the approach we take to pharmaceuticals and drugs”.

“We don’t just put a drug out there and then work out if it has bad side effects,” says Nicholson. “But the approach with software has always been that we will test it to a certain point, we will put it out there and then, if problems come, we will patch them. As software becomes more complicated and morphs into AI, we need to take a slightly different approach.”

This becomes more pertinent as AI percolates into critical infrastructure, such as electricity networks.

“There seems, in business, to be an increasing pace to innovation, introducing new technologies because there are competitive forces to keep up with the Joneses, that may drive some things being implemented too soon. That leaves directors and senior managers in a very difficult position,” says Nicholson, adding that many will need to boost their understanding of technology and AI.

“You need to know enough to ask intelligent questions. The Corporations Act 2001 (Cth), in section 189, says directors and executives can rely on experts giving them advice — but directors and execs are still ultimately responsible for their own decisions.”

Ethical issues

Dr Simon Longstaff AO, executive director of the Ethics Centre, says the priority for directors is to properly understand the general ethical position of the business and be clear about its purpose, values and principles. “Because what you do with AI can’t be something that’s treated in isolation,” he says.

He also recommends using an explicit ethical framework for the way AI and ML are developed and deployed. Last year, The Ethics Centre published its own ethical framework, which lays out seven guiding principles of good technology design, as well as an overarching reminder that just because something can be done, it does not mean that it should.

“Particularly in the case of AI, given its latent power, you need to wed technical mastery with ethical restraint — across a number of stakeholder groups,” says Longstaff.

Directors also need to consider the human impact of AI. “Organisations may need significantly fewer flesh-and-blood people to be employed,” says Longstaff. “How you go about managing that transition to be just and orderly becomes an ethical question for directors.”

One particular challenge directors need to address is the unforeseen consequences of AI, says Longstaff. “There are certain forms of neural networks, unsupervised ones, where the basic principles are known, but the specific routes they take to bring about the effects they generate are either unknown or unknowable. That means directors are going to be in the same position as ministers — responsible without necessarily being culpable.”

There have been plenty of corporate examples of AI going awry. In the US, Amazon abandoned an AI-infused recruiting system that was biased against women. Microsoft ditched its chatbot, Tay, after it started posting offensive tweets. And an AI-based system used to advise US judges on the risk of recidivism, to guide their sentencing and parole decisions, was found to be biased against people of colour. In all those cases, it was the data the AI system “learned” from that was at fault. Longstaff acknowledges that directors cannot be expected to understand the often arcane intricacies of every ML algorithm or AI platform deployed, but he says there is no excuse for poor data.

“Directors are entirely able to be responsible for the quality of the data,” he says. “There are a number of dimensions — completeness, accuracy, absence of bias — particularly around the quality of consent by those whose data is being aggregated.”

The not-for-profit Gradient Institute was formed in December by CSIRO’s Data61, IAG and the University of Sydney to work with business on the design of ethical AI. CEO Bill Simpson-Young says directors need to remember that “machine learning does what you tell it to do”.

“If you ask for something, you will get that thing,” says Simpson-Young. “If you ask it to maximise profit, it will maximise profit at all cost.” He compares AI to “a genie that is all powerful — it will do what you ask for, but be careful what you wish for”.

The Gradient Institute is focused on creating open-source tools that will be widely available to help companies develop ethical AI systems.

“Machines, in principle, can make much better decisions than humans,” says Simpson-Young. But they must be designed properly, with the right constraints and accurate, unbiased data to work with. That demands proper human oversight.

“You should know what your algorithms are doing and all companies should have an ethical framework that they operate with,” he says.

“You don’t need to understand how AI works; you need to understand the purpose of the algorithm, what it is trying to achieve. And directors need to know what they are trying to achieve — and that includes the constraints.”

What is the AICD doing?

- AI Toolkit: The AICD is looking for companies to pilot an AI Toolkit being developed by the World Economic Forum. The toolkit aims to help corporate boards identify the specific benefits and challenges of AI for their companies, and concrete ways to design, develop and deploy it ethically. The pilot will run June–December ahead of its full release in early 2020.

- Benchmarking: In 2019, the AICD will conduct research examining how directors approach innovation within their organisations, and how Australia measures up against its international peers. The research will help establish a baseline of current attitudes and practices that will allow the AICD to monitor progress over time.

- The AICD Technology Governance and Innovation Panel was set up in early 2018.

- Directors Playbook: The Future of Work was released jointly by the AICD Governance Leadership Centre and Deloitte in 2018.

- The AICD has become a member of the World Economic Forum Centre for the Fourth Industrial Revolution, a global network for technology governance.
